User testing averages for November 2020

Last month we shared a short overview of platform statistics and insights for October. One thing we pointed out was that studies and sessions are becoming shorter, as measured by the number of tasks they include. The average payout had also been growing for a couple of months.

In this short blog post, we’ll share some user testing averages for November and compare them with the previous month. All statistics come from our platform.

Studies and sessions

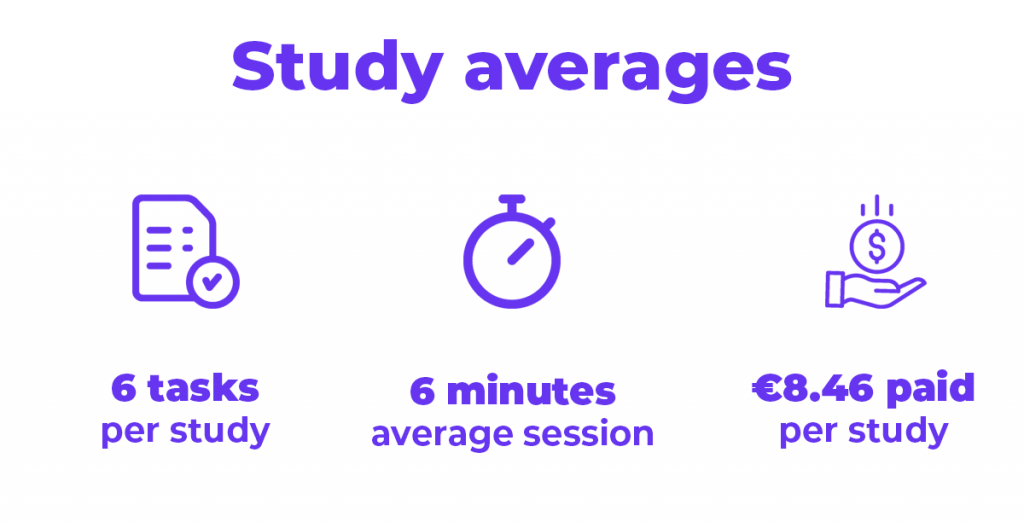

In our October review, we pointed out that session durations have become shorter since August, when the average duration was about 20 minutes. In October, it was 8 minutes, which is quite a drop in session times. November brought the average session duration down further, to 6 minutes.

In November, studies included about 6 tasks on average. Managers are also increasingly choosing to hire testers rather than rely on their own network of test users: 60% of studies were started with recruitment in mind, up from 50% in October. Managers are looking for new participants and targeting their studies accordingly.

Test creators are turning to recruitment more and more because new testers are less likely to be biased. Familiar test users from your own network have already taken part in your studies, and they know the products you have designed or are currently designing. That familiarity can bias them toward the product being tested.

The average payout per session also nudged upward, from €8.25 in October to €8.46 in November. Although this is not a big increase, it stands out given that the average session duration fell over the same period. Loosely speaking, an average tester earns €8.46 for a 6-minute session, or roughly €1.41 per minute. Seems like a good deal to us.

Testers

Something we haven’t covered before is the number of rejected sessions. In case you missed it, we’ve added an option for managers to reject unwanted sessions from their studies. This gives all test participants an incentive to give their best effort. The idea behind rejecting sessions is to encourage participants to give thorough answers and leave better insights for researchers. Test creators benefit from higher-quality feedback, and testers get better at providing it.

There were only 3 rejected sessions in November, compared to 17 in October. The number has dropped thanks to how managers initially responded to poor sessions: they rejected them, so testers had to put more effort into their next session. Cancelled sessions are down as well, with none in either October or November. Participants are taking their time to complete the tasks.

This also means we can offer a 100% answer rate and high-quality answers. Because managers can reject sessions that fall below standard, we expect participants to be more thorough with their answers, and testers should come prepared to give high-quality responses.

Final notes

Those are some of the user testing averages for November compared with October. If you are interested in more site-wide numbers, feel free to contact us at info@sharewell.eu.